Some years don’t just pass by — they transform you. 🔅

That is what 2025 has been.

Not every transformation is loud or obvious.

💫 Some are visible — new places, new roles, new beginnings.

🌱 Others happen quietly —

in how you think, what you value, how you observe life, what you let go of, and what you finally choose for yourself.

🌾 Growth doesn’t always announce itself. Sometimes it arrives quietly — and stays forever.

As this year comes to a close, I feel nothing but gratitude.

Gratitude for the people I met, the conversations that shaped me, the lessons that humbled me, the patience I found, and the strength I discovered within myself.

Thank you, 2025, for everything you were —

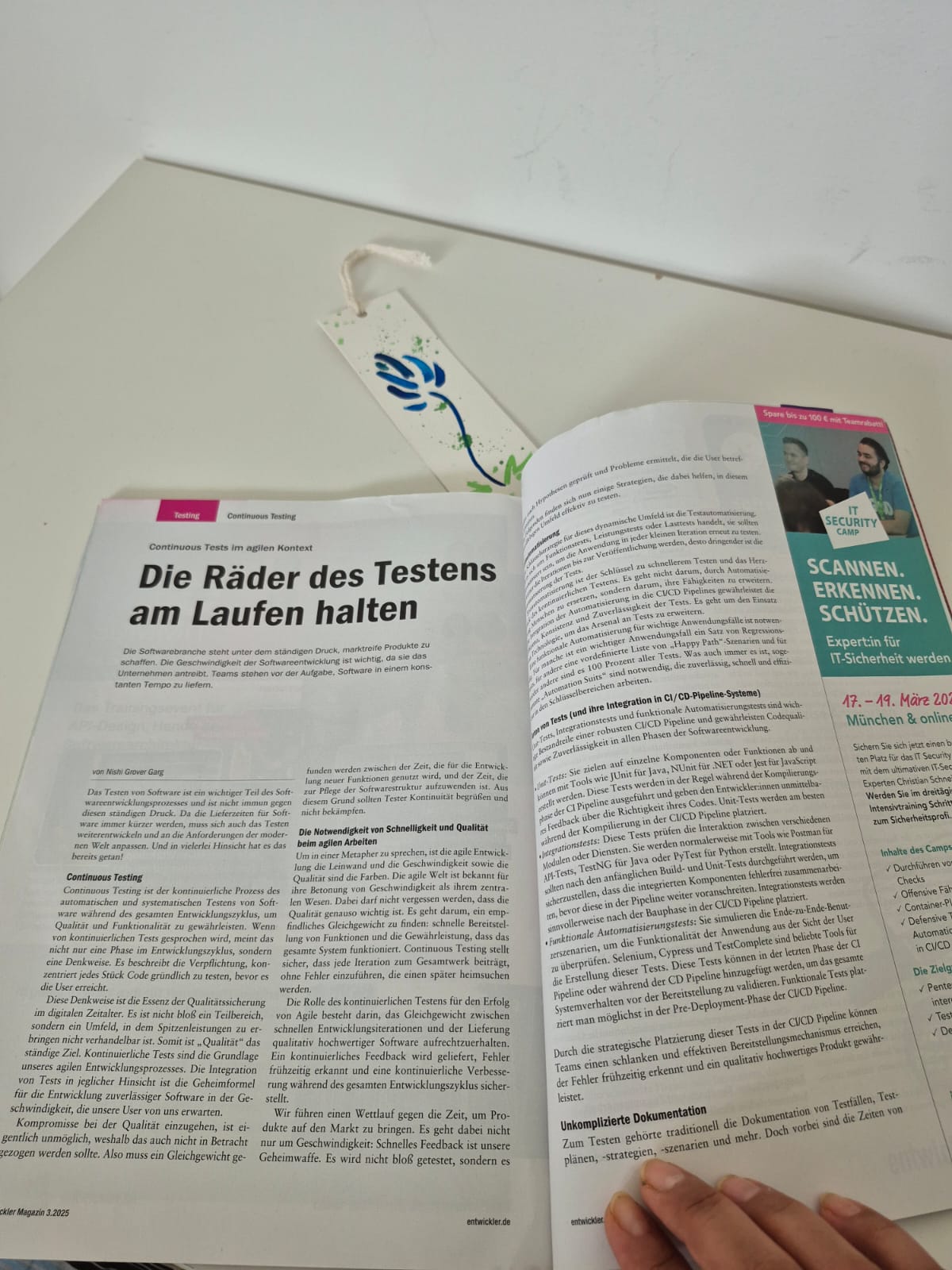

for the dreams that you brought down to reality,

for the opportunities that came my way,

for the challenges that built resilience,

for the changes that brought clarity,

and for the journey that made me more Me!

As the year draws to a close, it might be a good time to reflect and find things to be grateful for. Instead of setting New Year's resolutions, perhaps we can put a few systems in place in our lives: ones that help us become better at being ourselves!

Let us step into the next year more hopeful, grounded, and open — trusting the timing, the process, and the possibilities ahead. 🌟

Here’s to what’s next. 💫

#2025Reflection #YearInReview #Grateful #GratefulHeart #TransformationJourney #LifeTransitions #CareerGrowth #NewBeginnings #TrustTheProcess #LifeLessons #MondayMusings #YearEnd #YearEndReflection #PersonalGrowth #MovingForward #GrowthMindset #WhatALesson